Hi, I am trying to replicate the Poisson mixture example for the `mixture()` function, but with two multinomial random variables instead.

    x <- rbind(t(rmultinom(100, 1, c(0.1, 0.2, 0.7))),
               t(rmultinom(400, 1, c(0.2, 0.3, 0.5))))
    head(x)
    #>      [,1] [,2] [,3]
    #> [1,]    0    0    1
    #> [2,]    0    0    1
    #> [3,]    0    1    0
    #> [4,]    0    0    1
    #> [5,]    0    0    1
    #> [6,]    0    0    1

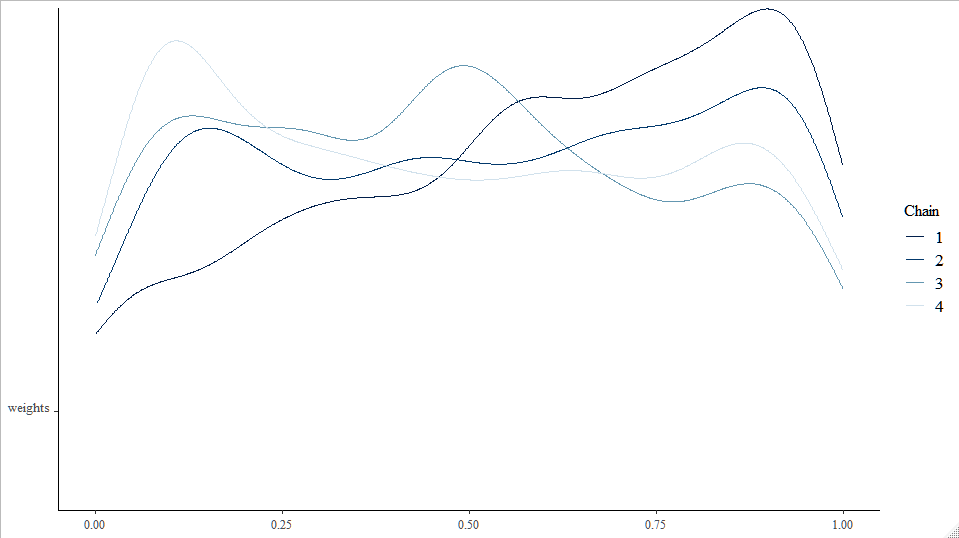

    weights <- uniform(0, 1, dim = 2)
    rates <- dirichlet(t(rep(1, 3)), n_realisations = 2)
    distribution(x) <- mixture(multinomial(1, rates[1, ]),
                               multinomial(1, rates[2, ]),
                               weights = weights)
    #> Error: left and right hand sides have different dimensions. The distribution must have dimension of either 500 x 3 or 1 x 1, but instead has dimension 1 x 3
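To narrow it down, here is a minimal base-R check of the shapes involved. It only rebuilds `x` the same way as above and inspects dimensions; it does not call greta at all.

```r
# Rebuild the observed data as above: 100 draws from the first
# multinomial stacked on 400 draws from the second.
x <- rbind(t(rmultinom(100, 1, c(0.1, 0.2, 0.7))),
           t(rmultinom(400, 1, c(0.2, 0.3, 0.5))))

# One row per observation, one column per category.
dim(x)
#> [1] 500   3

# A single size-1 multinomial draw is one row of length 3.
dim(t(rmultinom(1, 1, c(0.1, 0.2, 0.7))))
#> [1] 1 3
```

So the 1 x 3 in the error looks like the shape of a single size-1 multinomial draw, rather than the 500 x 3 shape of the data.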

I followed the same steps as the example, but I can't figure out where I went wrong. Could you help me debug this? Thank you.

BTW, I have been a JAGS user for four years and only just found this tool. It looks super cool, and I regret not trying it earlier.