My code runs MCMC sampling multiple times on a few datasets. The problem is that R ends up using gigabytes of memory and brings the system to a halt.

I am using the development version of greta.

I wrote a similar example to show what happens:

    library(greta)
    library(titanic)
    library(pryr)

# function to run mcmc and get samples

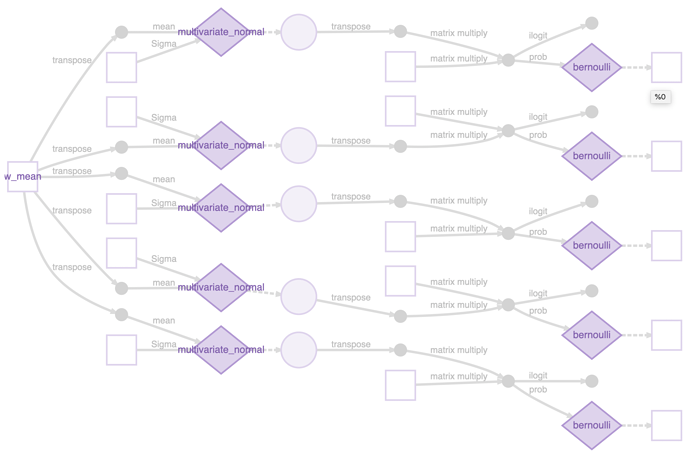

drawsample = function(X, y, w_mean, w_sigma){

# Prior

w = multivariate_normal(t(w_mean), w_sigma, n_realisations = 1)

# define distribution over output

linear = X %*% t(w)

p = ilogit(linear)

distribution(y) = bernoulli(p)

# define model

m = model(w)

# draw samples

draws <- mcmc(m, n_samples = 1000, chains = 1)

return(draws)

}

    cols = c('Age', 'Parch', 'Fare', 'Survived')
    data = titanic_train[cols]
    data = data[complete.cases(data), ]

    X = data[c('Age', 'Parch', 'Fare')]
    y = data$Survived

    num_features = 3
    w_mean = zeros(num_features)
    w_sigma = diag(num_features)

    for(iter in 1:5){
      print(mem_change(drawsample(X, y, w_mean, w_sigma)))
      print(mem_used())
    }

The output I get looks like this:

    warmup ====================================== 1000/1000 | eta: 0s
    sampling ====================================== 1000/1000 | eta: 0s
    169 kB
    191 MB
    warmup ====================================== 1000/1000 | eta: 0s
    sampling ====================================== 1000/1000 | eta: 0s
    816 kB
    192 MB
    warmup ====================================== 1000/1000 | eta: 0s
    sampling ====================================== 1000/1000 | eta: 0s
    588 kB
    192 MB
    warmup ====================================== 1000/1000 | eta: 0s
    sampling ====================================== 1000/1000 | eta: 0s
    775 kB
    193 MB
    warmup ====================================== 1000/1000 | eta: 0s
    sampling ====================================== 1000/1000 | eta: 0s
    1.18 MB
    193 MB

What could be causing this?

EDIT: On further investigation, it seems that every greta variable I define leaks memory, not just the mcmc function.
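
To test whether the growth comes from creating greta variables rather than from the mcmc call, something like the following minimal sketch might help. `track_growth` and `leaky` are hypothetical names made up for this illustration and are not part of greta or pryr. The helper only sees memory managed by R's own heap (the same thing `gc()` reports), so memory held by greta's TensorFlow backend outside R would not show up here. That may be one reason the numbers above look small compared with what the system reports.

```r
# Hypothetical helper (not part of greta or pryr): call f() repeatedly and
# report how much R-managed memory has grown since before the first call.
track_growth <- function(f, n_iter = 5) {
  # gc() runs a collection and returns a matrix; column 2 is memory in use (Mb)
  used_mb <- function() sum(gc()[, 2])
  baseline <- used_mb()
  growth <- numeric(n_iter)
  for (i in seq_len(n_iter)) {
    f()
    growth[i] <- used_mb() - baseline
  }
  growth
}

# Sanity check with a function that deliberately retains memory
leak_env <- new.env()
leak_env$store <- list()
leaky <- function() {
  # keep a reference to a new vector of one million doubles on every call
  leak_env$store[[length(leak_env$store) + 1]] <- numeric(1e6)
  invisible(NULL)
}
print(track_growth(leaky, n_iter = 3))

# Assumed usage for the greta case (requires a working greta installation):
# library(greta)
# print(track_growth(function() { w <- normal(0, 1, dim = 3); invisible(NULL) }))
```

If the greta call shows steady growth here even without running mcmc, that would support the idea that defining greta variables alone is enough to retain memory. If it stays flat while the process size reported by the operating system keeps rising, the memory is probably being held outside R's heap.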